Foreword

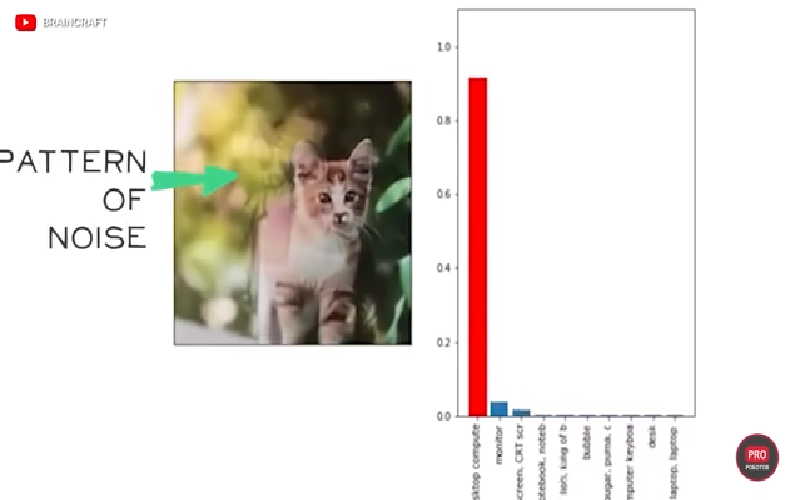

Deep learning models have been shown to be effective in a variety of tasks. However, these models are often opaque and lack the ability to use symbolic knowledge. In this paper, we propose a semantic loss function that can be used to train deep learning models with symbolic knowledge. The loss measures how close a network's outputs are to satisfying a set of logical constraints, and it can be used to steer deep learning models toward outputs that are consistent with that knowledge.

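A semantic loss function connects a neural network's outputs to symbolic knowledge expressed as a logical constraint. It treats each output as the probability that a Boolean variable is true. It then penalizes the network according to how little probability it places on outputs that satisfy the constraint. Minimizing this loss pushes the network toward predictions that are consistent with the symbolic knowledge.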
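One common formalization makes a few assumptions. The network outputs an independent probability p_i for each Boolean output variable X_i, and the symbolic knowledge is a propositional sentence α over those variables. The semantic loss is then the negative log-probability that an output sampled from p satisfies α:

$$
L^s(\alpha, p) \propto -\log \sum_{x \models \alpha} \; \prod_{i \,:\, x \models X_i} p_i \prod_{i \,:\, x \models \lnot X_i} (1 - p_i)
$$

The sum runs over every assignment x that satisfies α. The loss is therefore zero when the network puts all of its probability mass on satisfying assignments, and it grows as that mass shrinks.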

Which loss function is used for deep learning?

The most popular loss functions for deep learning classification models are binary cross-entropy and sparse categorical cross-entropy. Binary cross-entropy is useful for binary and multilabel classification problems. Sparse categorical cross-entropy is used for multiclass classification problems whose labels are stored as integer class indices rather than one-hot vectors, which saves memory when there are many classes.
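The minimal pure-Python sketch below uses hypothetical helper names. It illustrates that sparse categorical cross-entropy computes the same quantity as ordinary categorical cross-entropy; only the label format differs (an integer index instead of a one-hot vector):

```python
import math

def softmax(logits):
    # Subtract the largest logit before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_cross_entropy(one_hot_label, probs):
    # Label given as a one-hot vector, e.g. [0, 0, 1].
    return -sum(t * math.log(p) for t, p in zip(one_hot_label, probs))

def sparse_categorical_cross_entropy(class_index, probs):
    # Label given as an integer class index, e.g. 2. Only the true class's
    # probability contributes, so no one-hot vector is built.
    return -math.log(probs[class_index])

probs = softmax([2.0, 1.0, 0.1])
dense_loss = categorical_cross_entropy([0, 0, 1], probs)
sparse_loss = sparse_categorical_cross_entropy(2, probs)
print(dense_loss, sparse_loss)  # the same value
```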

A loss function compares the target and predicted output values and measures how well the neural network models the training data. During training, we aim to minimize this loss between the predicted and target outputs.

Our semantic loss function is derived from first principles and captures how close the neural network is to satisfying the constraints on its output. This loss function bridges neural output vectors and logical constraints, allowing us to train our models more effectively.
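The following is a sketch of how such a loss can be computed. It assumes independent per-output probabilities, takes the constant of proportionality to be 1, and uses hypothetical helper names. The semantic loss is the negative log of the total probability mass on assignments that satisfy the constraint. Brute-force enumeration is exponential in the number of variables, so it serves only as a check here. For the common "exactly one output is true" constraint there is a closed form:

```python
import itertools
import math

def semantic_loss(probs, constraint):
    # Brute-force semantic loss: enumerate every Boolean assignment, keep those
    # that satisfy the constraint, and sum their probabilities under independent
    # per-variable probabilities. Exponential in len(probs); illustration only.
    satisfying_mass = 0.0
    for assignment in itertools.product([False, True], repeat=len(probs)):
        if constraint(assignment):
            weight = 1.0
            for p, value in zip(probs, assignment):
                weight *= p if value else 1.0 - p
            satisfying_mass += weight
    # If no satisfying assignment has positive probability, the loss is unbounded.
    return math.inf if satisfying_mass == 0.0 else -math.log(satisfying_mass)

def exactly_one(assignment):
    # Hypothetical constraint: exactly one output variable is true.
    return sum(assignment) == 1

def exactly_one_semantic_loss(probs):
    # Closed form for the exactly-one constraint:
    # -log( sum_i p_i * prod_{j != i} (1 - p_j) )
    total = 0.0
    for i, p_i in enumerate(probs):
        term = p_i
        for j, p_j in enumerate(probs):
            if j != i:
                term *= 1.0 - p_j
        total += term
    return math.inf if total == 0.0 else -math.log(total)

probs = [0.8, 0.1, 0.1]
print(semantic_loss(probs, exactly_one))
print(exactly_one_semantic_loss(probs))  # same value as the brute-force version
```

A confident one-hot prediction such as [1.0, 0.0, 0.0] gives a loss of zero. A maximally uncertain prediction such as [0.5, 0.5, 0.5] is penalized more than [0.8, 0.1, 0.1].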

There is no single best loss function for all problems; different loss functions suit different types of problems. For example, cross-entropy loss is typically used for classification, while mean squared error is used for regression. The Huber loss is used for regression problems with outliers because it grows only linearly for large errors. The hinge loss is used for maximum-margin classifiers such as support vector machines.
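The sketch below computes both losses for a single example. It assumes delta = 1.0 as the Huber threshold and labels in {-1, +1} for the hinge loss. It shows why Huber tolerates outliers: beyond delta, it grows linearly instead of quadratically.

```python
def huber_loss(y_true, y_pred, delta=1.0):
    # Quadratic for residuals up to delta, linear beyond it.
    r = abs(y_true - y_pred)
    if r <= delta:
        return 0.5 * r * r
    return delta * (r - 0.5 * delta)

def hinge_loss(label, score):
    # label is -1 or +1; score is the raw classifier output.
    # Zero once the example is on the correct side with margin at least 1.
    return max(0.0, 1.0 - label * score)

print(huber_loss(0.0, 0.5))   # 0.125 (quadratic region)
print(huber_loss(0.0, 10.0))  # 9.5, versus 50.0 for 0.5 * r**2
print(hinge_loss(1, 2.0))     # 0.0 (correct with a large margin)
print(hinge_loss(-1, 0.5))    # 1.5 (wrong side of the boundary)
```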

What is L1 loss function in deep learning?

L1 loss is a common loss function in machine learning. It is the sum of the absolute differences between the true values and the predicted values. Each per-example term is known as an absolute error, and averaging these absolute errors gives the mean absolute error (MAE).
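The minimal sketch below uses hypothetical toy data to show the difference between the summed L1 loss and its mean, MAE:

```python
def l1_loss(y_true, y_pred):
    # Sum of absolute differences between true and predicted values.
    return sum(abs(t - p) for t, p in zip(y_true, y_pred))

def mean_absolute_error(y_true, y_pred):
    # MAE: the L1 loss averaged over the number of examples.
    return l1_loss(y_true, y_pred) / len(y_true)

y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(l1_loss(y_true, y_pred))              # 2.0
print(mean_absolute_error(y_true, y_pred))  # 0.5
```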

Loss functions are a key part of any machine learning algorithm. The loss function is used to measure how well the algorithm is performing and to make adjustments to the model. There are a number of different loss functions that can be used, each with its own advantages and disadvantages.

Mean Squared Error (MSE) is one of the most commonly used loss functions. MSE measures the average of the squared differences between the predicted and actual values. MSE is easy to understand and to compute, but it is sensitive to outliers because squaring magnifies large errors.
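The outlier sensitivity is easy to see on hypothetical data. In the sketch below, one badly wrong prediction raises the MSE a hundredfold even though the other three predictions are unchanged:

```python
def mean_squared_error(y_true, y_pred):
    # Average of squared differences; squaring magnifies large errors.
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [1.0, 2.0, 3.0, 4.0]
uniform_errors = [1.5, 2.5, 3.5, 4.5]  # every prediction off by 0.5
one_outlier = [1.5, 2.5, 3.5, 14.0]    # one prediction off by 10
print(mean_squared_error(y_true, uniform_errors))  # 0.25
print(mean_squared_error(y_true, one_outlier))     # 25.1875
```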

Mean Squared Logarithmic Error (MSLE) is another common loss function. MSLE is similar to MSE, but it applies log(1 + y) to the predicted and actual values before squaring their difference. As a result, it penalizes relative rather than absolute errors, which reduces the influence of very large target values.
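The sketch below assumes non-negative values and uses hypothetical single-example data. It shows that MSLE depends on the ratio (1 + prediction) / (1 + target) rather than on the raw gap. Both predictions are off by a factor of 2 in that ratio, so MSLE is the same for both, while MSE differs by a factor of 100:

```python
import math

def mean_squared_log_error(y_true, y_pred):
    # math.log1p(y) computes log(1 + y); values are assumed to be non-negative.
    return sum((math.log1p(t) - math.log1p(p)) ** 2
               for t, p in zip(y_true, y_pred)) / len(y_true)

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

small = ([9.0], [19.0])    # (1 + 19) / (1 + 9) = 2
large = ([99.0], [199.0])  # (1 + 199) / (1 + 99) = 2
print(mean_squared_log_error(*small), mean_squared_log_error(*large))  # both about (log 2)**2
print(mean_squared_error(*small), mean_squared_error(*large))          # 100.0 10000.0
```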

Mean Absolute Error (MAE) is another loss function that is similar to MSE. MAE measures the average of the absolute difference between the predicted and actual values. MAE is less sensitive to outliers than MSE.

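Binary Cross-Entropy is a loss function used for binary classification problems. It compares the predicted probability p of the positive class with the true label y, which is 0 or 1. The loss for one example is -[y log p + (1 - y) log(1 - p)], averaged over the examples. In multilabel problems, it is applied to each label independently.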
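The minimal sketch below implements the formula, with a hypothetical `eps` clipping parameter added so that `math.log` never receives 0. It is applied to a hypothetical three-label multilabel example:

```python
import math

def binary_cross_entropy(labels, probs, eps=1e-12):
    # labels: 0/1 targets; probs: predicted probabilities of the positive class.
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

# Multilabel use: each of the three labels is scored independently.
labels = [1, 0, 1]
probs = [0.9, 0.2, 0.6]
print(binary_cross_entropy(labels, probs))
```

Confident, correct predictions drive the loss toward zero. Confident, wrong predictions make it large.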

What are the L1 and L2 loss functions?

Loss functions penalize a learner for poor predictions. Two of the most commonly used loss functions are least absolute deviations (L1) and least squares errors (L2). The two behave differently, and the choice between them depends on the data and the model.

The L2 loss function is the sum of the squared differences between the estimated and actual target values. It is easy to minimize because it is differentiable everywhere. However, it is sensitive to outliers: because errors are squared, a few extreme points can dominate the fit.

The L1 loss function is the sum of the absolute differences between the estimated and actual target values. It is not differentiable at points where a difference is exactly zero, but it is robust to outliers. The L1 loss is also known as least absolute deviations (LAD) and is used in robust regression.
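The robustness difference can be seen with a hypothetical toy example. If a model predicts a single constant c for every point, the L2 loss is minimized by the mean of the targets and the L1 loss by the median. A single outlier therefore drags the L2 optimum far away but barely moves the L1 optimum. The sketch below checks this with a simple grid search rather than a closed-form solver:

```python
import statistics

def l2_loss(c, targets):
    # Sum of squared differences between a constant prediction c and the targets.
    return sum((y - c) ** 2 for y in targets)

def l1_loss(c, targets):
    # Sum of absolute differences between a constant prediction c and the targets.
    return sum(abs(y - c) for y in targets)

targets = [1.0, 2.0, 3.0, 4.0, 100.0]  # 100.0 is an outlier

# Candidate constants 0.00, 0.01, ..., 100.00.
grid = [i / 100 for i in range(10001)]
best_l2 = min(grid, key=lambda c: l2_loss(c, targets))
best_l1 = min(grid, key=lambda c: l1_loss(c, targets))

print(best_l2, statistics.mean(targets))    # 22.0 22.0
print(best_l1, statistics.median(targets))  # 3.0 3.0
```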

A loss function is a mathematical function that calculates the error between predicted values and actual values. Loss functions are used in conjunction with training data to improve the accuracy of predictions made by a machine learning model.

Available loss functions vary by ML algorithm, but some common ones include the mean squared error (MSE), cross-entropy, and hinge loss. The choice of loss function often depends on the type of data being modeled and the type of prediction being made (regression or classification).

Loss functions are an important part of machine learning because they provide a way to measure how well a model is performing. Without a loss function, it would be difficult to determine whether a model is improving or not.

Is ReLU a loss function?

No. The rectified linear activation function (ReLU) is an activation function, not a loss function. It is a piecewise linear function, f(x) = max(0, x), that outputs the input directly if it is positive and outputs zero otherwise. ReLU is one of the most commonly used activation functions in deep learning.
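The minimal sketch below, with hypothetical helper names, illustrates the distinction. ReLU transforms a layer's pre-activation values and takes no target, whereas a loss function compares predictions with targets:

```python
def relu(x):
    # Passes positive inputs through unchanged and maps everything else to 0.
    return max(0.0, x)

def relu_derivative(x):
    # Gradient used in backpropagation. ReLU is not differentiable at exactly 0,
    # so the value there is a convention (0 here).
    return 1.0 if x > 0 else 0.0

pre_activations = [-2.0, -0.5, 0.0, 1.5, 3.0]
print([relu(x) for x in pre_activations])             # [0.0, 0.0, 0.0, 1.5, 3.0]
print([relu_derivative(x) for x in pre_activations])  # [0.0, 0.0, 0.0, 1.0, 1.0]
```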

Semantics is the study of the meaning of linguistic expressions. It can be broken down into the following three subcategories:

Formal semantics is the study of grammatical meaning in natural language. This includes the study of sentence structure, word order, and other aspects of grammar that contribute to meaning.

Conceptual semantics is the study of the concepts that underlie word meanings. This includes the study of the meanings of basic concepts like “time”, “space”, and “cause and effect”.

Lexical semantics is the study of word meaning. This includes the study of how words are organized in a language’s lexicon, and how the meanings of these words can change in different contexts.

What is an example of semantic learning?

Semantic memory is a type of long-term memory that stores general knowledge about the world, including concepts, ideas, and facts. Examples of semantic memory include remembering that Washington, DC, is the US capital, that Washington is also a state, and that Shakespeare was born in April 1564, as well as knowing that elephants and giraffes are both mammals.

Formal semantics is the study of the meaning of a text or utterance in terms of the structures that make it up. This includes an investigation of the relationships between the parts of the utterance, and how those relationships contribute to the overall meaning.

Lexical semantics is the study of the meaning of individual words. This includes an investigation of the relationships between words, and how those relationships contribute to the overall meaning of a text or utterance.

Conceptual semantics is the study of the meaning of a text or utterance in terms of the concepts that it is based on. This includes an investigation of the relationships between the concepts that make up the utterance, and how those relationships contribute to the overall meaning.

What are the different types of loss?

No matter what the circumstances, experiencing a loss is always difficult. Whether it’s the death of a loved one, the end of a friendship, or something else, loss is always hard to deal with. Here are a few different types of loss that can be particularly difficult to cope with:

The death of a close friend: Losing a close friend is one of the most difficult things you can go through. It’s hard to say goodbye to someone you care about so much, and the grief can be overwhelming. If you’re struggling to cope with the loss of a close friend, it’s important to reach out for support from other friends and family members.

The death of a partner: Losing a partner is one of the most devastating things that can happen. If you’re struggling to cope with the loss of your partner, it’s important to reach out for support from other loved ones. It’s also important to give yourself time to grieve and to remember the good times you shared together.

The death of a classmate or colleague: Losing a classmate or colleague can be difficult, especially if you were close. It’s important to reach out for support from other friends and family members. It’s also important to remember the good times you shared.

The two main types of loss functions used when training neural network models are cross-entropy and mean squared error. Cross-entropy is generally used for classification tasks, while mean squared error is used for regression tasks.

What are the different loss functions for neural networks?

There are various loss functions that can be used in neural networks. The most common ones are the mean absolute error (L1 loss), the mean squared error (L2 loss), the Huber loss, the cross-entropy (also called log loss), the relative entropy (also called Kullback-Leibler divergence), and the squared hinge loss.
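Two of these losses are closely related. For a true distribution p and a predicted distribution q, cross-entropy equals the entropy of p plus the KL divergence from p to q. Because the entropy of p does not depend on the model, minimizing one is equivalent to minimizing the other. The pure-Python sketch below checks this identity on hypothetical distributions and also defines the squared hinge loss, assuming labels in {-1, +1}:

```python
import math

def entropy(p):
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    # p: true distribution; q: predicted distribution (q must be > 0 where p > 0).
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    # Relative entropy KL(p || q); zero when q equals p.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def squared_hinge_loss(label, score):
    # label is -1 or +1; squares the ordinary hinge loss max(0, 1 - label * score).
    return max(0.0, 1.0 - label * score) ** 2

p = [0.7, 0.2, 0.1]
q = [0.5, 0.3, 0.2]
# Cross-entropy decomposes into the entropy of p plus KL(p || q).
print(cross_entropy(p, q), entropy(p) + kl_divergence(p, q))
```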

L1 regularization adds a penalty term to the loss function to prevent overfitting; linear regression with this penalty is known as lasso regression. L2 regularization adds a different penalty term that also helps prevent overfitting; linear regression with this penalty is known as ridge regression.
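The sketch below uses hypothetical helper names and weights. It shows that a regularization penalty is simply added to the data-fitting loss, with the penalty strengths treated as user-chosen hyperparameters:

```python
def l1_penalty(weights):
    # Sum of absolute values (the lasso penalty).
    return sum(abs(w) for w in weights)

def l2_penalty(weights):
    # Sum of squares (the ridge penalty).
    return sum(w * w for w in weights)

def regularized_loss(data_loss, weights, l1_strength=0.0, l2_strength=0.0):
    # Total objective = data-fitting loss + weighted penalty terms.
    return data_loss + l1_strength * l1_penalty(weights) + l2_strength * l2_penalty(weights)

weights = [0.5, -2.0, 0.0]
print(regularized_loss(1.0, weights, l1_strength=0.1))  # L1-regularized objective
print(regularized_loss(1.0, weights, l2_strength=0.1))  # L2-regularized objective
```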

What do L1 and L2 do?

It is important to distinguish between a person’s first language (L1) and second language (L2), as this can have a significant impact on language teaching. L1 is often the language that a person is most comfortable with and has the most experience with, while L2 is the language that they are currently learning. This can influence how a person learns and uses language, so it is important to keep this in mind when teaching.

L1 and L2 regularization are two ways of penalizing the weights of a model in order to avoid overfitting.

L1 regularization penalizes the sum of the absolute values of the weights, while L2 regularization penalizes the sum of the squares of the weights. As a result, L1 regularization tends to produce a sparse model in which many weights are exactly zero. L2 regularization shrinks weights toward zero but rarely makes them exactly zero, producing a dense model.
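To see why L1 produces exact zeros and L2 does not, consider a hypothetical one-weight problem. The unregularized optimum is z, and the data term is 0.5 * (w - z)**2, an assumption chosen to give closed forms. Adding lam * |w| yields the soft-thresholding rule, which sets small weights exactly to zero. Adding lam * w**2 only rescales z by 1 / (1 + 2 * lam):

```python
def l1_minimizer(z, lam):
    # argmin_w 0.5 * (w - z)**2 + lam * |w|  (soft-thresholding)
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def l2_minimizer(z, lam):
    # argmin_w 0.5 * (w - z)**2 + lam * w**2  (uniform shrinkage)
    return z / (1.0 + 2.0 * lam)

zs = [3.0, 0.4, -0.2, -1.5]
lam = 0.5
print([l1_minimizer(z, lam) for z in zs])  # [2.5, 0.0, 0.0, -1.0]
print([l2_minimizer(z, lam) for z in zs])  # [1.5, 0.2, -0.1, -0.75]
```

With the same strength, L1 zeroes out the two small weights, while L2 keeps all four non-zero.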

Which of these two methods is better ultimately depends on the dataset and the model being used. In general, though, L2 regularization is more common.

Final Words

There is currently no agreed-upon answer to which loss function is best; the right choice depends on the problem.

The proposed semantic loss function can effectively combine deep learning with symbolic knowledge and improve the performance of deep learning models. The loss function can be used to learn the embeddings of the symbols and can be applied to different tasks such as image classification, object detection, and semantic segmentation.